
Deep learning is a powerful tool with applications across varied fields, but building neural networks generally involves complex code and large datasets, which makes debugging a challenging task. Mistakes in neural networks range from simple syntax errors to intricate issues that subtly degrade performance. This guide explores effective strategies for debugging neural networks, helping developers find and resolve common coding errors to improve model accuracy and efficiency.

1. Understanding the Basics of Neural Network Debugging

Neural network debugging involves identifying, diagnosing, and fixing issues that hinder a model’s performance. It calls for a mix of coding skills, an understanding of neural network architecture, and the ability to interpret results. Here are some key types of errors encountered in neural networks:

Syntax errors: Common coding faults such as typos, incorrect imports, or data type mismatches.

Logical errors: Issues in the model architecture or training process, frequently producing unexpected behavior.

Numerical errors: Problems arising from operations such as matrix multiplications or calculations involving extremely large or small numbers, which can lead to overflow or underflow.

By using a structured approach, you can systematically identify these errors, isolate the root cause, and make sure the network functions as designed.
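
As a small illustration of the numerical errors mentioned above, the following sketch contrasts a naive softmax, which overflows for large inputs, with a version that subtracts the maximum before exponentiating. It assumes NumPy is available; the function names are illustrative and not taken from any framework.

```python
import numpy as np

def naive_softmax(logits):
    # Exponentiating large values overflows to inf, and inf / inf yields NaN.
    exps = np.exp(logits)
    return exps / exps.sum()

def stable_softmax(logits):
    # Subtracting the maximum leaves the result unchanged mathematically
    # but keeps every exponent at or below zero, so exp() cannot overflow.
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / exps.sum()

logits = np.array([1000.0, 1001.0, 1002.0])

# Silence the overflow warnings so the NaN result can be inspected directly.
with np.errstate(over="ignore", invalid="ignore"):
    naive = naive_softmax(logits)

stable = stable_softmax(logits)

print("naive :", naive)
print("stable:", stable)
```

The naive version returns NaN for every entry, while the shifted version returns finite probabilities that sum to one. Frameworks typically apply a similar shift internally, so this kind of bug tends to appear in hand-written layers or losses.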

2. Common Issues in Neural Networks and How to Identify Them

a. Incorrect Model Architecture

Errors in the design of a neural network architecture can lead to poor performance or failure to train. To debug these issues:

Verify the input and output shapes: Make sure that every layer receives inputs and produces outputs of the expected dimensions. Use model summary functions (e.g., model.summary() in Keras) to examine layer-by-layer output shapes.

Check layer compatibility: Ensure that each layer is compatible with the next. For example, a common mistake is placing a dense (fully connected) layer after a convolutional layer without flattening the data, leading to a shape mismatch error.

Solution: Use debugging tools such as model summaries, and add print statements to display intermediate layer outputs. For instance, in PyTorch, inserting a print(tensor.shape) line after each layer can help track down shape mismatches.
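
When a framework summary is not at hand, the expected shapes can also be worked out by hand. The sketch below is a framework-free illustration: it applies the standard output-size formula for convolution and pooling layers to a hypothetical layer stack (the layer names and sizes are assumptions) and reports how many features a dense layer would receive after flattening.

```python
def conv2d_output_size(size, kernel, stride=1, padding=0):
    # Standard formula for one spatial dimension of a convolution or pooling layer.
    return (size + 2 * padding - kernel) // stride + 1

def trace_shapes(input_shape, layers):
    """Print and return the (channels, height, width) shape after each layer."""
    channels, height, width = input_shape
    shapes = []
    for name, out_channels, kernel, stride, padding in layers:
        height = conv2d_output_size(height, kernel, stride, padding)
        width = conv2d_output_size(width, kernel, stride, padding)
        if out_channels is not None:  # pooling layers keep the channel count
            channels = out_channels
        shapes.append((name, (channels, height, width)))
        print(f"{name:>6}: {(channels, height, width)}")
    return shapes

# Hypothetical stack for a 1x28x28 input:
# (name, out_channels, kernel, stride, padding)
layers = [
    ("conv1", 8, 3, 1, 1),
    ("pool1", None, 2, 2, 0),
    ("conv2", 16, 3, 1, 0),
    ("pool2", None, 2, 2, 0),
]

shapes = trace_shapes((1, 28, 28), layers)
channels, height, width = shapes[-1][1]
flattened_features = channels * height * width
print("dense layer input features after flattening:", flattened_features)
```

Comparing these hand-computed shapes with what the framework reports is a quick way to confirm that the dense layer's input size matches the flattened convolutional output.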

b. Vanishing and Exploding Gradients

Deep networks can suffer from vanishing or exploding gradients, making training slow or unstable. This problem frequently occurs in recurrent neural networks (RNNs) and very deep networks.

Vanishing Gradients: When gradients become too small, layers fail to update properly, leading to stagnation.

Exploding Gradients: If gradients become excessively large, model parameters can diverge, causing instability.

Solution: Use gradient clipping for exploding gradients and try gradient scaling techniques. Additionally, using advanced optimizers (e.g., Adam or RMSprop) and proper weight initialization methods (e.g., Xavier or He initialization) can help maintain gradient stability. Monitoring gradient values during training helps to quickly spot these issues.
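
One common form of gradient clipping rescales all gradients together so that their combined L2 norm stays below a threshold. The following is a minimal NumPy sketch of that idea, not a framework implementation; the function names and the example gradients are assumptions made for illustration.

```python
import numpy as np

def global_grad_norm(grads):
    # L2 norm computed over all gradient arrays as if they were one vector.
    return float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together so their global norm is at most max_norm."""
    norm = global_grad_norm(grads)
    if norm <= max_norm:
        return grads, norm
    scale = max_norm / norm
    return [g * scale for g in grads], norm

# Hypothetical gradients for two parameter tensors.
grads = [np.array([3.0, 4.0]), np.array([[12.0]])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)

print("norm before clipping:", norm_before)
print("norm after clipping: ", global_grad_norm(clipped))
```

Logging the pre-clipping norm at every step doubles as the gradient monitoring suggested above: values that shrink toward zero point to vanishing gradients, while values that keep hitting the clipping threshold point to exploding ones.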

c. Overfitting and Underfitting

Overfitting occurs when the model performs well on training data but poorly on validation data, indicating that it has memorized rather than generalized. Underfitting occurs when a model performs poorly on both training and validation sets, indicating it hasn’t learned patterns effectively.

Diagnosing Overfitting and Underfitting: Plot training and validation loss/accuracy. If training loss continues to decrease while validation loss rises, the model is likely overfitting. Conversely, if both losses remain high, the model may be underfitting.

Solution: Use regularization techniques like dropout, weight regularization (L2), and data augmentation for overfitting. For underfitting, increase model complexity, try more sophisticated architectures, or tune hyperparameters like learning rate and batch size.
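
The diagnostic rule described above can be turned into a rough automated check on logged loss histories. The sketch below is a heuristic only: the function name, the comparison window, and the threshold for a "high" loss are all assumptions that would need tuning for a real task.

```python
def diagnose_fit(train_losses, val_losses, high_loss=1.0, window=3):
    """Classify the end of a training run from per-epoch loss histories.

    high_loss and window are illustrative thresholds, not universal values.
    """
    if len(train_losses) <= window or len(val_losses) <= window:
        return "not enough epochs"

    # Compare the latest epoch with the one `window` epochs earlier.
    train_falling = train_losses[-1] < train_losses[-1 - window]
    val_rising = val_losses[-1] > val_losses[-1 - window]

    if train_falling and val_rising:
        return "likely overfitting"
    if train_losses[-1] > high_loss and val_losses[-1] > high_loss:
        return "possibly underfitting"
    return "no obvious problem"

overfit_run = diagnose_fit(
    train_losses=[1.2, 0.8, 0.5, 0.3, 0.2, 0.1],
    val_losses=[1.3, 0.9, 0.7, 0.8, 0.9, 1.1],
)
underfit_run = diagnose_fit(
    train_losses=[2.3, 2.2, 2.2, 2.2, 2.2, 2.2],
    val_losses=[2.3, 2.3, 2.2, 2.3, 2.3, 2.3],
)
print(overfit_run)
print(underfit_run)
```

A check like this is best treated as a prompt to look at the plotted curves, not as a replacement for them.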

3. Debugging Training Process Errors

a. Monitoring Loss and Accuracy

Loss and accuracy provide essential clues for diagnosing problems in training. Here’s how to leverage them:

Sudden spikes in loss: If loss values unexpectedly spike, check for issues in data batching, learning rate settings, or potential numerical instability.

Flat loss curve: If the loss doesn’t decrease over epochs, there could be issues with the learning rate (too low) or data normalization.

Solution: Log loss and accuracy metrics at each epoch and visualize them. When using high learning rates, consider reducing them gradually or using a learning rate scheduler to dynamically adjust the rate.
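
Both ideas can be sketched in a few lines of plain Python. The example below flags epochs whose loss jumps well above the recent average and computes a simple step-decay learning rate schedule. The function names, window size, spike factor, and decay settings are illustrative assumptions, not recommended defaults.

```python
def find_loss_spikes(losses, window=3, factor=2.0):
    """Return epoch indices whose loss exceeds factor times the mean of the previous window."""
    spikes = []
    for epoch in range(window, len(losses)):
        recent_mean = sum(losses[epoch - window:epoch]) / window
        if losses[epoch] > factor * recent_mean:
            spikes.append(epoch)
    return spikes

def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=10):
    # Multiply the learning rate by `drop` once every `epochs_per_drop` epochs.
    return initial_lr * (drop ** (epoch // epochs_per_drop))

losses = [1.00, 0.80, 0.70, 0.65, 2.50, 0.60, 0.55]
spike_epochs = find_loss_spikes(losses)
lr_at_25 = step_decay(0.1, epoch=25)

print("spike epochs:", spike_epochs)
print("learning rate at epoch 25:", lr_at_25)
```

In practice the logged losses would come from the training loop, and the flagged epochs indicate where to inspect the batches and learning rate that were in effect.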

b. Checking for Data Leakage

Data leakage occurs when information from the test set inadvertently enters the training process, leading to overly optimistic results during evaluation but poor generalization.

Identifying Data Leakage: Compare training and test accuracy. If test accuracy is suspiciously high, for example matching or exceeding training accuracy on a hard task, verify that test data is not included in training. Cross-check preprocessing pipelines to ensure there is no overlap.

Solution: Carefully manage data splits, fit preprocessing steps (such as normalization statistics) on the training set only, and then apply them unchanged to the test set. Regularly review data handling code to avoid leakage.
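
Two of these checks are easy to script. The sketch below, which assumes NumPy and uses made-up data, looks for test rows that also appear in the training set and shows normalization statistics being computed from the training split alone. The helper names are hypothetical.

```python
import numpy as np

def find_overlap(train_rows, test_rows):
    """Return test-set indices whose feature rows also appear in the training set."""
    train_keys = {tuple(row) for row in train_rows.tolist()}
    return [i for i, row in enumerate(test_rows.tolist()) if tuple(row) in train_keys]

def standardize(train, test):
    # Fit the statistics on the training split only, then reuse them for the test split.
    mean = train.mean(axis=0)
    std = train.std(axis=0) + 1e-8
    return (train - mean) / std, (test - mean) / std

train = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
test = np.array([[7.0, 8.0], [3.0, 4.0]])  # second row duplicates a training row

leaked = find_overlap(train, test)
print("leaked test indices:", leaked)

train_scaled, test_scaled = standardize(train, test)
print("train mean after scaling:", train_scaled.mean(axis=0))
```

This overlap check only catches exact duplicates. Near-duplicates, or leakage through time ordering or shared identifiers, need checks tailored to the dataset.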

4. Tools and Techniques for Neural Network Debugging

Modern deep learning frameworks offer solid debugging tools. Here’s a breakdown of some popular techniques:

a. Using Visualization Tools

TensorBoard: TensorFlow’s visualization suite, which helps monitor metrics such as loss, accuracy, and gradient norms. Use it to track the training process in real time.

Weights & Biases, Neptune.ai: Advanced tools for experiment tracking that allow you to visualize metrics and compare models across runs.

Application: Use these tools to compare results across different model architectures, hyperparameters, and training runs. Visualization helps spot anomalies and trends in performance, making it easier to identify root causes.

b. Gradient Checking

Gradient checking is a numerical technique that helps verify whether backpropagation gradients are being computed correctly, particularly in custom layers or loss functions.

How It Works: By approximating gradients using finite differences, you can compare analytical and numerical gradients to spot discrepancies.

When to Use: If you suspect that custom layers or modifications are causing errors, gradient checking can validate that gradients are correctly computed.

Solution: Manually implement gradient checking for custom components or use libraries with built-in functions.
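
A manual gradient check can be sketched as follows. The example assumes NumPy and uses a mean squared error loss on a linear model as a stand-in for a custom component; the function names and the tolerance mentioned in the comment are illustrative assumptions.

```python
import numpy as np

def loss_fn(w, x, y):
    # Mean squared error of a linear model; stands in for a custom loss.
    return float(np.mean((x @ w - y) ** 2))

def analytic_grad(w, x, y):
    # Hand-derived gradient of loss_fn with respect to w.
    return 2.0 * x.T @ (x @ w - y) / len(y)

def numerical_grad(f, w, eps=1e-6):
    # Central finite differences: (f(w + eps) - f(w - eps)) / (2 * eps) per coordinate.
    grad = np.zeros_like(w)
    for i in range(w.size):
        step = np.zeros_like(w)
        step[i] = eps
        grad[i] = (f(w + step) - f(w - step)) / (2.0 * eps)
    return grad

rng = np.random.default_rng(0)
x = rng.normal(size=(20, 3))
y = rng.normal(size=20)
w = rng.normal(size=3)

g_analytic = analytic_grad(w, x, y)
g_numeric = numerical_grad(lambda v: loss_fn(v, x, y), w)

# Relative error; values far above roughly 1e-5 would suggest a bug in analytic_grad.
rel_error = np.linalg.norm(g_analytic - g_numeric) / (
    np.linalg.norm(g_analytic) + np.linalg.norm(g_numeric)
)
print("relative error:", rel_error)
```

Running the check in double precision, as here, keeps rounding error from masking real discrepancies. For PyTorch code, torch.autograd.gradcheck provides a built-in version of the same comparison.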

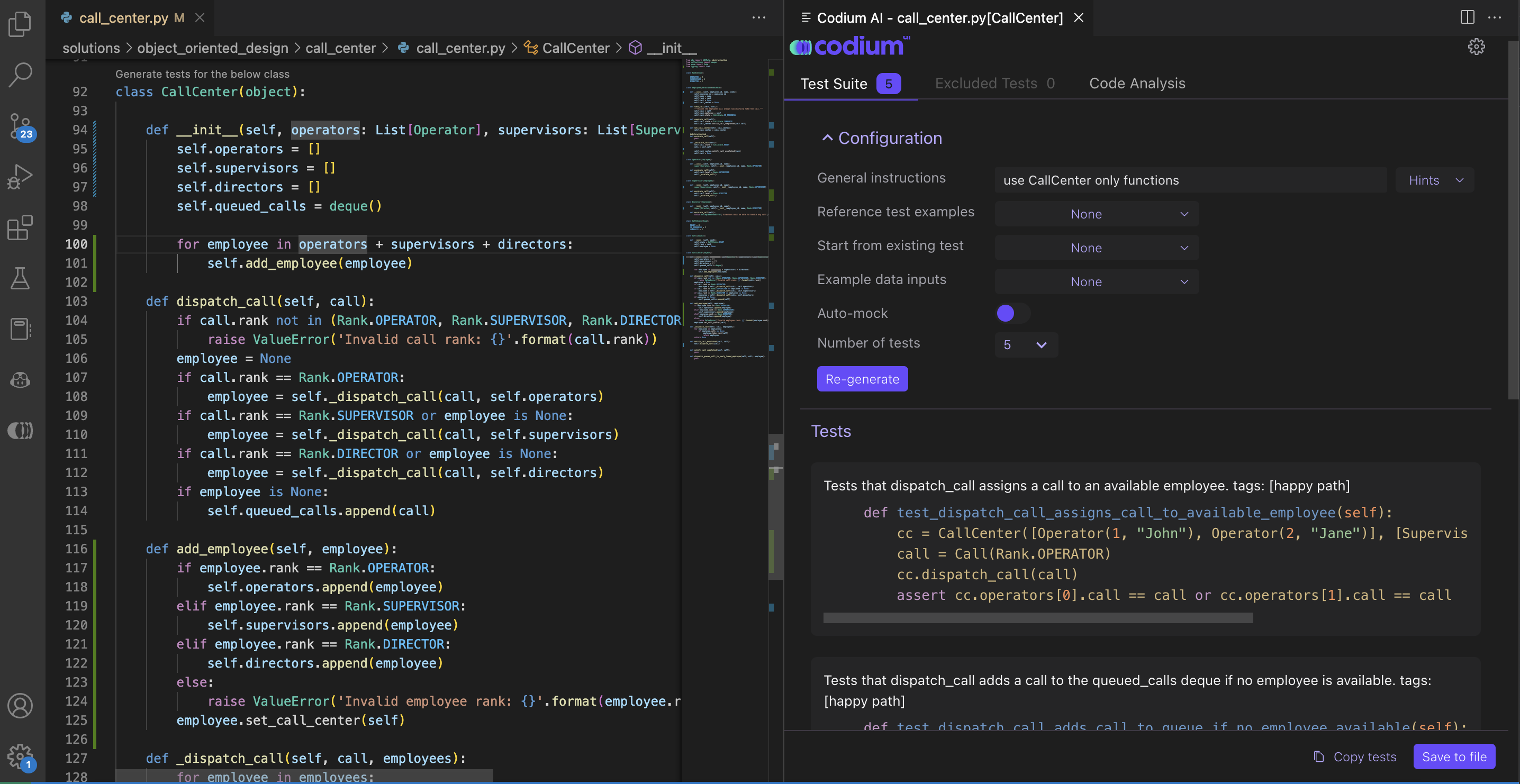

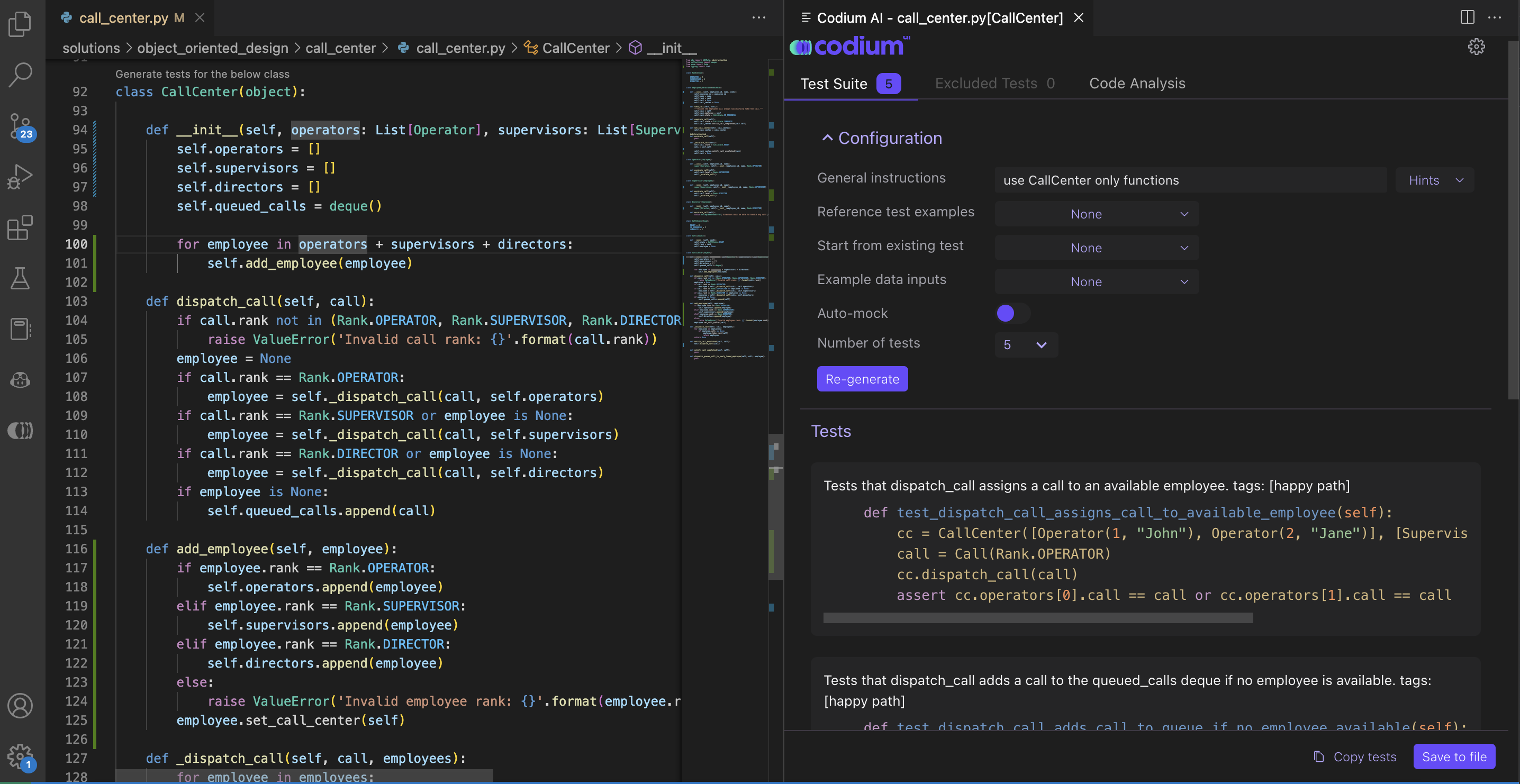

c. Unit Testing and Model Verification

Incorporating unit tests into the code can help catch specific bugs at the layer level.

Implement Layer-wise Testing: Create tests for each layer or custom function to verify that they operate as expected.

Verify Data Flow: Make sure data flows correctly from layer to layer. Confirm that data is not being inadvertently modified by inspecting intermediate values.

Solution: Use unit testing frameworks like pytest in Python to build small tests for each network component.
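
As an illustration, the tests below exercise a hypothetical dense layer written in NumPy (dense_forward is an assumption made for this example, not part of any library). They check the output shape, the activation behavior, and that the input array is left unmodified.

```python
import numpy as np

def dense_forward(x, weights, bias):
    # Hypothetical layer under test: affine transform followed by ReLU.
    return np.maximum(x @ weights + bias, 0.0)

def test_output_shape():
    x = np.ones((4, 3))
    out = dense_forward(x, np.ones((3, 5)), np.zeros(5))
    assert out.shape == (4, 5)

def test_relu_clamps_negative_values():
    x = np.array([[1.0, -2.0]])
    out = dense_forward(x, np.eye(2), np.zeros(2))
    assert np.array_equal(out, np.array([[1.0, 0.0]]))

def test_input_is_not_modified():
    x = np.array([[1.0, -2.0]])
    original = x.copy()
    dense_forward(x, np.eye(2), np.zeros(2))
    assert np.array_equal(x, original)
```

Saved in a file whose name starts with test_, these functions are collected automatically when pytest is run in that directory. Small fixed inputs with hand-computed expected values keep failures easy to interpret.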

5. Debugging Tools in Popular Frameworks

a. TensorFlow and Keras Debugging Tools

TensorFlow and Keras offer numerous resources for debugging:

tf.print and tf.debugging: Functions like tf.debugging.assert_* allow you to add custom assertions, catch NaN values, and ensure that tensors remain within expected ranges.

Checkpoints: Save model states at various training points, allowing you to revert to known working versions if an error occurs.

b. PyTorch Debugging Tools

PyTorch also provides helpful debugging features:

torch.autograd: Use torch.autograd.set_detect_anomaly(True) to identify problematic operations by tracking backward passes.

Hooks: Register hooks to inspect the gradients and outputs of individual layers, which makes it easier to locate gradient issues.
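
The following sketch shows one way these features might be combined, assuming PyTorch is installed. The small model, the dictionary names, and the helper functions are illustrative. Forward hooks record each layer's output shape, and tensor hooks on the parameters record gradient norms during the backward pass.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
# Raise an error if backward produces NaN, with a traceback to the forward operation.
torch.autograd.set_detect_anomaly(True)

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
output_shapes = {}
grad_norms = {}

def save_output_shape(name):
    # Forward hooks receive the module, its inputs, and its output.
    def hook(module, inputs, output):
        output_shapes[name] = tuple(output.shape)
    return hook

def save_grad_norm(name):
    # Tensor hooks receive the gradient of that tensor during backward.
    def hook(grad):
        grad_norms[name] = grad.norm().item()
    return hook

for name, module in model.named_children():
    module.register_forward_hook(save_output_shape(name))
for name, param in model.named_parameters():
    param.register_hook(save_grad_norm(name))

x = torch.randn(16, 4)
loss = model(x).pow(2).mean()
loss.backward()

print(output_shapes)
print(grad_norms)

torch.autograd.set_detect_anomaly(False)  # anomaly detection slows training
```

Anomaly detection adds noticeable overhead, so it is usually enabled only while tracking down a specific problem and switched off again afterwards.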

6. Preventive Measures and Best Practices

Proactive debugging can reduce potential issues in deep learning projects:

Automate data checks: Set up scripts that validate data types, dimensions, and values before feeding them into models.

Use version control: Keep track of model architectures, hyperparameters, and code changes with tools such as Git.

Document experiments: Record each training run, noting the architecture, hyperparameters, and any unusual observations. This helps when comparing results or revisiting previous steps.
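
An automated data check can be as simple as a function that inspects each batch before it reaches the model. The sketch below assumes NumPy arrays and a classification task. The function name, the expected dtype, and the specific checks are assumptions chosen for illustration.

```python
import numpy as np

def validate_batch(features, labels, num_classes, expected_feature_shape):
    """Return a list of human-readable problems found in one batch (empty if none)."""
    problems = []
    if features.dtype != np.float32:
        problems.append(f"features dtype is {features.dtype}, expected float32")
    if features.shape[1:] != expected_feature_shape:
        problems.append(
            f"feature shape is {features.shape[1:]}, expected {expected_feature_shape}"
        )
    if len(features) != len(labels):
        problems.append("features and labels have different lengths")
    if not np.isfinite(features).all():
        problems.append("features contain NaN or infinite values")
    if labels.min() < 0 or labels.max() >= num_classes:
        problems.append("labels fall outside the valid class range")
    return problems

good = validate_batch(
    np.zeros((2, 3), dtype=np.float32), np.array([0, 1]),
    num_classes=2, expected_feature_shape=(3,),
)
bad = validate_batch(
    np.array([[1.0, np.nan, 0.0]]), np.array([5]),
    num_classes=2, expected_feature_shape=(3,),
)
print(good)
print(bad)
```

Returning a list of problems, instead of stopping at the first one, makes it easier to see every issue with a dataset in a single pass.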

Conclusion

Debugging neural networks can be challenging, but a structured approach and the right tools make it manageable. By understanding common error sources, such as model architecture issues, training instabilities, and data leakage, developers can identify and resolve errors systematically. Using a combination of visualization tools, gradient checks, unit tests, and framework-specific debugging functions, developers can improve their models’ performance, achieve greater stability, and optimize for efficiency.

Debugging is an iterative process that not only improves the model but also deepens one’s understanding of neural networks. As developers gain experience in troubleshooting, they become more adept at building resilient and robust deep learning systems that perform well across diverse tasks.