System integration testing (SIT) is crucial in any software development project, but it becomes even more vital in the context of AI projects. The unique attributes of AI systems, such as their reliance on complex algorithms, vast data inputs, and evolving models, present distinctive challenges for integration testing. In this article, we explore best practices for system integration testing in AI projects and provide strategies for ensuring success.

Understanding System Integration Testing in AI Projects

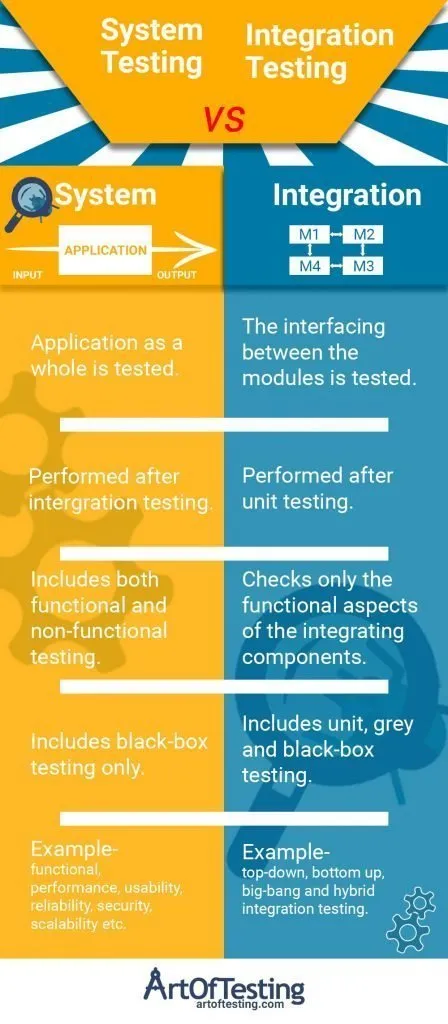

System integration testing evaluates how the different components of a system interact. In AI projects, this includes testing how AI models, data pipelines, user interfaces, and external services interact. The primary objective is to identify and address problems that arise when these components are integrated, ensuring that the entire system functions as expected.
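To make the idea concrete, here is a minimal Python sketch of a single integration test. It assumes two hypothetical components, a `preprocess` function and a `StubModel` class (neither comes from a real library), and tests the contract between them rather than each one in isolation: the pipeline's output must be an input the model accepts, and an interface change must fail loudly.

```python
import unittest


# Hypothetical components: a feature-preprocessing step and a stand-in model.
def preprocess(record: dict) -> list:
    """Turn a raw record into the feature vector the model expects."""
    return [float(record["age"]) / 100.0, 1.0 if record["member"] else 0.0]


class StubModel:
    """Stand-in for a trained model that declares the input width it accepts."""

    n_features = 2

    def predict(self, features: list) -> float:
        if len(features) != self.n_features:
            raise ValueError(f"expected {self.n_features} features, got {len(features)}")
        # Toy scoring rule; a real model would be loaded from a saved artifact.
        return 0.5 * features[0] + 0.5 * features[1]


class PipelineModelIntegrationTest(unittest.TestCase):
    """Tests the interface between the two components, not each one alone."""

    def test_pipeline_output_is_accepted_by_model(self):
        features = preprocess({"age": 42, "member": True})
        score = StubModel().predict(features)
        self.assertGreaterEqual(score, 0.0)
        self.assertLessEqual(score, 1.0)

    def test_width_mismatch_is_detected(self):
        # Simulates a pipeline change that adds a feature the model never saw.
        with self.assertRaises(ValueError):
            StubModel().predict(preprocess({"age": 42, "member": True}) + [0.3])
```

In a real project the stubs would be replaced by the actual pipeline code and a loaded model, while the test keeps asserting on the boundary between them.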

Best Practices for System Integration Testing in AI Projects

Define Clear Objectives and Requirements

Before starting integration testing, it’s essential to establish clear objectives and requirements. This includes understanding how the AI models interact with other system components and what outcomes are expected. Define success criteria for the integration process and ensure all stakeholders agree on these goals. This clarity guides the testing process and sets a baseline for evaluating results.
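One way to make success criteria unambiguous is to encode them as data that the test suite checks automatically. The sketch below assumes three example metrics (accuracy, 95th-percentile latency, and error rate); the class name, function name, and threshold values are illustrative placeholders to be replaced by whatever the stakeholders actually agree on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Measurable exit criteria agreed with stakeholders before testing starts."""

    min_accuracy: float
    max_p95_latency_ms: float
    max_error_rate: float


def unmet_criteria(criteria: SuccessCriteria, observed: dict) -> list:
    """Return one message per criterion that the observed results miss."""
    misses = []
    if observed["accuracy"] < criteria.min_accuracy:
        misses.append(f"accuracy {observed['accuracy']} < {criteria.min_accuracy}")
    if observed["p95_latency_ms"] > criteria.max_p95_latency_ms:
        misses.append(
            f"p95 latency {observed['p95_latency_ms']} ms > {criteria.max_p95_latency_ms} ms"
        )
    if observed["error_rate"] > criteria.max_error_rate:
        misses.append(f"error rate {observed['error_rate']} > {criteria.max_error_rate}")
    return misses


# Placeholder thresholds for illustration only.
criteria = SuccessCriteria(min_accuracy=0.90, max_p95_latency_ms=200.0, max_error_rate=0.01)
```

An empty list means the integration cycle met every agreed criterion; anything else names exactly which goal was missed.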

Develop Comprehensive Test Plans

A well-structured test plan is vital for effective integration testing. The plan should include:

Test Scope: Define which components and interfaces will be tested.

Test Cases: Develop test cases that cover various integration scenarios, including edge cases.

Test Data: Identify the data required for testing and ensure it reflects real-world conditions.

Test Environment: Set up an environment that mimics the production environment as closely as possible.

A comprehensive test plan helps ensure that all relevant scenarios are covered and provides a roadmap for the testing process.
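Test cases, including edge cases, can be kept as a table that a single test walks through. This sketch assumes a hypothetical `handle_request` service entry point whose model call is replaced by a deterministic rule; the scenarios are examples, not an exhaustive list.

```python
import unittest


def handle_request(payload: dict) -> dict:
    """Hypothetical service entry point: validate input, then call the model."""
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        return {"status": 400, "error": "text must be a non-empty string"}
    # Stand-in for the model call: label by length so results are deterministic.
    label = "long" if len(text) > 20 else "short"
    return {"status": 200, "label": label}


# Each row: (scenario name, request payload, expected HTTP-style status).
CASES = [
    ("typical input", {"text": "reset my password"}, 200),
    ("very long input", {"text": "x" * 10_000}, 200),
    ("empty string", {"text": ""}, 400),
    ("whitespace only", {"text": "   "}, 400),
    ("missing field", {}, 400),
    ("wrong type", {"text": 42}, 400),
    ("non-ASCII input", {"text": "¿dónde está mi pedido?"}, 200),
]


class RequestScenarioTest(unittest.TestCase):
    def test_scenarios(self):
        for name, payload, expected_status in CASES:
            # subTest reports each failing scenario separately instead of
            # stopping at the first failure.
            with self.subTest(scenario=name):
                self.assertEqual(handle_request(payload)["status"], expected_status)
```

Adding a newly discovered edge case then means adding one row to `CASES` rather than writing a new test method.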

Use Realistic Test Data

AI systems often depend on large volumes of data for training and inference. For integration testing, it’s crucial to use realistic test data that accurately represents the data the system will encounter in production. This includes data with different characteristics, such as varying formats, volumes, and quality. Realistic test data helps identify issues that might not be apparent with synthetic or incomplete data.
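A simple check for realism is to compare the test data against a sample of production records. The sketch below is one possible approach, assuming records are Python dictionaries; `profile` and `coverage_gaps` are hypothetical helpers that flag value types production contains but the test data lacks, and differences in missing-value rates beyond a chosen tolerance.

```python
def profile(records: list, field: str) -> dict:
    """Summarize one field: missing-value rate and the value types present."""
    values = [r.get(field) for r in records]
    present = [v for v in values if v is not None and v != ""]
    return {
        "missing_rate": (len(values) - len(present)) / len(values) if values else 0.0,
        "types": {type(v).__name__ for v in present},
    }


def coverage_gaps(test_records: list, prod_sample: list, field: str,
                  tolerance: float = 0.05) -> list:
    """List ways the test data fails to reflect a production sample for one field."""
    test, prod = profile(test_records, field), profile(prod_sample, field)
    gaps = []
    # Value types that production sends but the test data never exercises.
    unseen = prod["types"] - test["types"]
    if unseen:
        gaps.append(f"{field}: test data lacks value types {sorted(unseen)}")
    # Quality mismatch: test data much cleaner (or dirtier) than production.
    if abs(test["missing_rate"] - prod["missing_rate"]) > tolerance:
        gaps.append(
            f"{field}: missing rate {test['missing_rate']:.2f} "
            f"vs. production {prod['missing_rate']:.2f}"
        )
    return gaps
```

For instance, test data containing only integer amounts would be flagged if production also sends amounts as strings and floats, or leaves some of them empty.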

Test for Interoperability

In AI projects, different components, such as machine learning models, APIs, and databases, need to work together seamlessly. Test for interoperability by evaluating how well these components interact and exchange data. This includes checking API endpoints, data formats, and communication protocols. Ensuring interoperability helps prevent issues that might arise when integrating different system components.
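Checking data formats at an interface can be automated as a lightweight contract test. The sketch assumes a hypothetical model-serving endpoint that returns JSON with `model_version`, `label`, and `score` fields; the contract and field names are illustrative, and a real project might express the same rules in a schema language such as JSON Schema.

```python
import json

# Hypothetical contract: field name -> accepted Python type(s) after json.loads.
PREDICTION_CONTRACT = {
    "model_version": str,
    "label": str,
    "score": (int, float),
}


def _matches(value, expected) -> bool:
    # bool is a subclass of int in Python, so reject it unless bool is expected.
    if isinstance(value, bool):
        return expected is bool
    return isinstance(value, expected)


def contract_violations(raw_body: str, contract: dict) -> list:
    """Check a JSON response body against a field/type contract."""
    try:
        body = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        return [f"body is not valid JSON: {exc}"]
    if not isinstance(body, dict):
        return ["body must be a JSON object"]
    problems = []
    for field, expected in contract.items():
        if field not in body:
            problems.append(f"missing field '{field}'")
        elif not _matches(body[field], expected):
            problems.append(f"field '{field}' has type {type(body[field]).__name__}")
    return problems
```

Running this against real responses from each service version catches format drift (for example, a score serialized as a string) before it reaches downstream consumers.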

Implement Continuous Integration and Continuous Testing

Continuous Integration (CI) and Continuous Testing (CT) are practices that help maintain software quality throughout the development lifecycle. For AI projects, incorporate testing into the CI pipeline so that integration tests run regularly. Automate test execution where possible to quickly detect and address issues. Continuous testing helps catch problems early and reduces the risk of defects in the final product.

Validate AI Models in the Integration Context

AI models are a central component of AI projects, and their integration with other system components needs special attention. Validate that the AI models function correctly within the integrated system and meet performance expectations. This includes:

Model Accuracy: Ensure the model’s predictions or outputs are accurate and consistent.

Performance: Test the model’s performance under different conditions and loads.

Integration Points: Verify that the model integrates smoothly with other components, such as APIs and databases.
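The checks above can be sketched as one validation helper that calls the model through the same `predict` callable the integrated system would use. This is a hypothetical sketch: it assumes a deterministic model (a stochastic one would need a tolerance rather than exact equality in the consistency check), and the thresholds are supplied by the caller.

```python
import statistics
import time


def validate_model(predict, labeled_examples, min_accuracy, max_median_latency_ms):
    """Check accuracy, consistency, and latency of a model behind its integration point."""
    correct = 0
    consistent = True
    latencies_ms = []
    for features, expected in labeled_examples:
        start = time.perf_counter()
        prediction = predict(features)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        correct += prediction == expected
        # Consistency: a deterministic model must repeat its answer for the same input.
        if predict(features) != prediction:
            consistent = False
    accuracy = correct / len(labeled_examples)
    median_ms = statistics.median(latencies_ms)
    return {
        "accuracy": accuracy,
        "median_latency_ms": median_ms,
        "consistent": consistent,
        "passed": consistent
        and accuracy >= min_accuracy
        and median_ms <= max_median_latency_ms,
    }
```

Because `predict` is passed in, the same helper can wrap a local model object, an API client, or a database-backed feature lookup followed by inference.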

Conduct End-to-End Testing

End-to-end testing involves testing the complete system from start to finish to ensure that all components work together as intended. For AI projects, this means validating the flow of data from ingestion through processing, modeling, and output. End-to-end testing helps identify issues that may not be apparent in isolated component tests and ensures that the integrated system meets all requirements.
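The following sketch shows an end-to-end test over a toy pipeline with ingestion, processing, modeling, and output stages. All stage functions are hypothetical stand-ins (the "model" is a fixed threshold rule); the point is that the test feeds raw input in at one end and asserts only on what comes out of the other.

```python
import csv
import io


def ingest(raw_csv: str) -> list:
    """Ingestion stage: parse raw CSV text into row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def process(rows: list) -> list:
    """Processing stage: drop rows with a missing amount and cast types."""
    return [{"id": r["id"], "amount": float(r["amount"])} for r in rows if r.get("amount")]


def score(rows: list) -> list:
    """Modeling stage: a toy threshold rule standing in for a trained model."""
    return [{**r, "flagged": r["amount"] > 1000} for r in rows]


def publish(rows: list) -> list:
    """Output stage: the IDs a downstream system would receive."""
    return [r["id"] for r in rows if r["flagged"]]


def run_pipeline(raw_csv: str) -> list:
    return publish(score(process(ingest(raw_csv))))


def test_end_to_end():
    raw = "id,amount\na1,25.00\na2,\na3,5000\n"
    # a2 is dropped for its missing amount; only a3 exceeds the threshold.
    assert run_pipeline(raw) == ["a3"]
```

A bug in any single stage (a parsing change, a dropped cast, an inverted threshold) shows up as a wrong final output, even if each stage's own unit tests still pass.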

Monitor and Evaluate Test Outcomes

Monitoring and analyzing test results is essential for identifying and addressing issues. Use monitoring tools to track execution and record metrics such as execution time, error rates, and performance benchmarks. Analyze test results to pinpoint the root causes of failures and make necessary adjustments. Regularly reviewing test results helps improve the overall quality of the system and informs future testing efforts.
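Collected results become more useful once they are aggregated. The sketch below assumes each test run is recorded as a hypothetical `CaseResult` with a duration, a pass/fail flag, and an error message, and summarizes the error rate, median duration, slowest case, and most frequent failure causes as a starting point for root-cause analysis.

```python
import statistics
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class CaseResult:
    """One executed integration test case (hypothetical record format)."""

    name: str
    duration_s: float
    passed: bool
    error: Optional[str] = None


def summarize(runs: list) -> dict:
    """Aggregate per-case results into run-level metrics."""
    if not runs:
        raise ValueError("no test results to summarize")
    failures = [r for r in runs if not r.passed]
    # Group failures by message so that recurring root causes stand out.
    causes = Counter(r.error or "unknown" for r in failures)
    return {
        "total": len(runs),
        "error_rate": len(failures) / len(runs),
        "median_duration_s": statistics.median([r.duration_s for r in runs]),
        "slowest": max(runs, key=lambda r: r.duration_s).name,
        "top_failure_causes": causes.most_common(3),
    }
```

Tracking these summaries across runs shows whether a fix actually reduced a recurring failure or merely moved it.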

Address Scalability and Performance

AI systems often handle large volumes of data and require significant computational resources. Test the scalability and performance of the integrated system to ensure it can handle expected loads and data volumes. Evaluate how well the system performs under stress and identify any bottlenecks or performance issues. Ensuring scalability and performance helps maintain a high-quality user experience and system reliability.
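A basic load test can be sketched with a thread pool from Python's standard library. The sketch assumes the system under test is reachable through a callable `call` (in practice an HTTP client or SDK call, left abstract here), uses the nearest-rank method for the 95th percentile, and counts any raised exception as an error; dedicated load-testing tools offer much finer control over ramp-up and request mix.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def load_test(call, n_requests: int, concurrency: int) -> dict:
    """Send n_requests calls through `concurrency` worker threads and summarize them."""

    def timed_call(i):
        start = time.perf_counter()
        try:
            call(i)
            ok = True
        except Exception:
            # Any exception (timeout, overload, bad response) counts as an error.
            ok = False
        return time.perf_counter() - start, ok

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(n_requests)))
    wall_s = time.perf_counter() - wall_start

    latencies = sorted(duration for duration, _ in results)
    errors = sum(1 for _, ok in results if not ok)
    # Nearest-rank 95th percentile: the ceil(0.95 * n)-th smallest latency.
    p95 = latencies[math.ceil(0.95 * len(latencies)) - 1]
    return {
        "throughput_rps": n_requests / wall_s,
        "error_rate": errors / n_requests,
        "median_latency_s": statistics.median(latencies),
        "p95_latency_s": p95,
    }
```

Running it at increasing concurrency levels and watching where throughput stops rising while p95 latency keeps climbing is one way to locate a bottleneck.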

Incorporate Feedback and Iterate

Integration testing is an iterative process. Incorporate feedback from each testing phase to refine and improve the system. This includes addressing any issues discovered during testing, updating test cases, and revisiting integration points. Iterative testing helps improve the system’s overall quality and ensures that it meets user expectations.

Conclusion

System integration testing is a critical aspect of AI projects, ensuring that all components work together seamlessly to deliver a functional and reliable system. By following best practices such as defining clear objectives, developing comprehensive test plans, using realistic test data, and implementing continuous integration and testing, you can improve the effectiveness of your integration testing efforts.

Effective integration testing in AI projects involves validating the performance and interoperability of AI models, conducting end-to-end testing, monitoring and analyzing test results, addressing scalability and performance, and incorporating feedback. By applying these strategies, you can ensure the success of your AI projects and deliver high-quality solutions that meet user needs and expectations.