In recent years, the rise of AI-driven code generators has transformed the software development industry. These tools, powered by advanced machine learning models, can automatically create code snippets, functions, and even entire programs from user inputs. As their use becomes more widespread, the need for thorough performance testing of these AI code generators has become increasingly critical. Understanding the importance of performance testing in AI code generators is essential for ensuring that they are reliable, efficient, and capable of meeting the demands of real-world software development.

The Evolution of AI Code Generators

AI code generators, such as OpenAI’s Codex and GitHub Copilot, are built on large language models (LLMs) that have been trained on large amounts of code written in many programming languages. These models can interpret natural language prompts and convert them into working code. The promise of AI code generators lies in their ability to accelerate development processes, reduce human error, and democratize coding by making it more accessible to non-programmers.

However, with great power comes great responsibility. As these AI tools are integrated into development workflows, they need to be subjected to performance testing to ensure they deliver the expected results without compromising quality, security, or efficiency.

What Is Performance Testing?

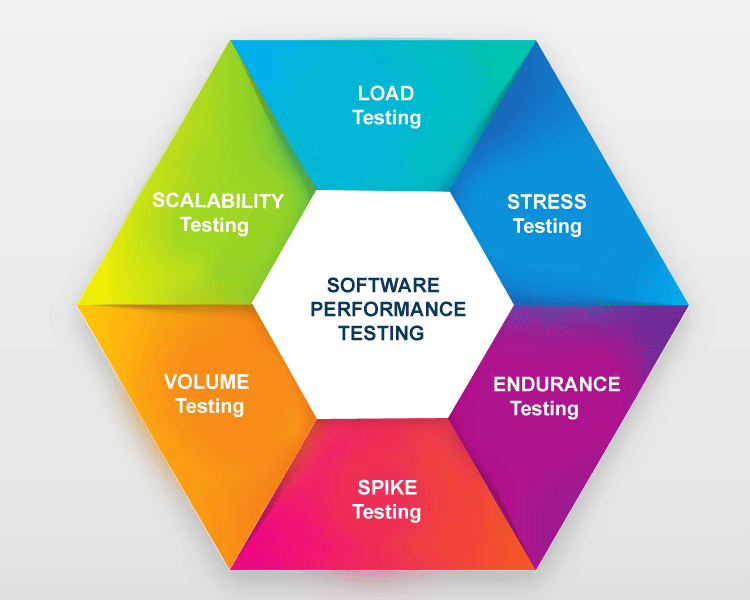

Performance testing is an essential aspect of software testing that examines how a system behaves under various conditions. It involves testing the speed, responsiveness, stability, and scalability of a system. In the context of AI code generators, performance testing assesses how well these tools generate code under different scenarios, including varying levels of input complexity, different programming languages, and diverse user requirements.

Performance testing generally includes several types of tests:

Load Testing: Determines how the AI code generator performs under a specific load, such as generating code for multiple users simultaneously.

Stress Testing: Examines the system’s behavior under extreme conditions, such as generating complex code or large volumes of code in a short period.

Scalability Testing: Assesses the AI code generator’s ability to scale up or down based on user demand.

Stability Testing: Checks whether the tool can consistently generate accurate and functional code over extended periods without degradation.
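As a rough illustration of a load test, the sketch below sends a batch of prompts to a code generator from a fixed number of concurrent workers and records the error count and worst-case latency. It assumes the generator is exposed as a plain Python callable; `fake_generate` is a hypothetical stand-in that only sleeps, and `run_load_test` is an illustrative helper, not part of any real tool's API. Raising `concurrent_users` well beyond expected traffic turns the same harness into a crude stress test, and repeating it over hours approximates a stability test.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real code-generator call (simulates ~10 ms of latency)."""
    time.sleep(0.01)
    return "def add(a, b):\n    return a + b\n"


def run_load_test(
    generate: Callable[[str], str], prompts: list[str], concurrent_users: int
) -> dict[str, object]:
    """Send every prompt using `concurrent_users` worker threads; summarize errors and latency."""

    def timed_call(prompt: str) -> tuple[bool, float]:
        start = time.perf_counter()
        try:
            generate(prompt)
            ok = True
        except Exception:
            ok = False  # any exception counts as a failed request
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(timed_call, prompts))

    latencies = [lat for ok, lat in results if ok]
    return {
        "requests": len(results),
        "errors": sum(1 for ok, _ in results if not ok),
        "max_latency_s": max(latencies) if latencies else None,
    }


if __name__ == "__main__":
    print(run_load_test(fake_generate, ["write an add function"] * 20, concurrent_users=5))
```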

Why Performance Testing Is Essential for AI Code Generators

1. Ensuring Code Accuracy and Quality

One of the primary concerns with AI-generated code is accuracy. While AI models can produce syntactically correct code, its logical correctness and adherence to best practices are not guaranteed. Performance testing helps identify cases in which the AI code generator produces incorrect or suboptimal code, allowing developers to refine the tool and reduce the likelihood of errors in the final product.
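One common way to check functional correctness, rather than just syntax, is to execute generated code against known input/output pairs. The sketch below assumes the generator returns source code that defines a function whose name is known in advance; `passes_tests` is a hypothetical helper. Running untrusted model output with `exec` is unsafe outside an isolated sandbox, so treat this only as an illustration of the idea.

```python
from typing import Any


def passes_tests(source: str, func_name: str, cases: list[tuple[tuple, Any]]) -> bool:
    """Return True if `func_name` defined in `source` returns the expected value for every case."""
    namespace: dict[str, Any] = {}
    try:
        # WARNING: only exec untrusted, model-generated code inside a sandbox.
        exec(source, namespace)
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in cases)
    except Exception:
        # Syntax errors, a missing function, and runtime errors all count as failures.
        return False


cases = [((1, 2), 3), ((-1, 1), 0)]
print(passes_tests("def add(a, b):\n    return a + b\n", "add", cases))  # True
print(passes_tests("def add(a, b):\n    return a - b\n", "add", cases))  # False
print(passes_tests("def add(a, b) return a + b", "add", cases))         # False (syntax error)
```

Running such a check over many prompts and reporting the fraction that pass gives a simple accuracy metric to track alongside speed.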

2. Evaluating Efficiency and Speed

In software development, time is of the essence. AI code generators are often used to streamline the coding process, but if the tool itself is slow or inefficient, that defeats the purpose. Performance testing measures how long the AI takes to generate code in various scenarios. By doing so, developers can identify bottlenecks and optimize the tool so that it delivers code quickly and effectively, even under high demand.
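Measuring generation time usually means reporting percentiles rather than a single average, because a few slow requests can hide behind a good mean. A minimal sketch using only the standard library, assuming a synchronous callable; `fake_generate` and `latency_percentiles` are hypothetical names:

```python
import statistics
import time
from typing import Callable


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a code-generator call; latency grows with prompt length."""
    time.sleep(0.001 + 0.0001 * len(prompt))
    return "# generated code\n"


def latency_percentiles(
    generate: Callable[[str], str], prompts: list[str], repeats: int = 5
) -> dict[str, float]:
    """Time each prompt `repeats` times and return p50, p95, and max latency in seconds."""
    samples = []
    for prompt in prompts:
        for _ in range(repeats):
            start = time.perf_counter()
            generate(prompt)
            samples.append(time.perf_counter() - start)
    # method="inclusive" keeps estimates within the observed min/max for small samples.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50_s": cuts[49], "p95_s": cuts[94], "max_s": max(samples)}


if __name__ == "__main__":
    prompts = ["add two numbers", "parse a CSV file and sum a column " * 3]
    print(latency_percentiles(fake_generate, prompts))
```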

3. Scalability Concerns

As AI code generators gain popularity, they need to handle growing numbers of users and more complex tasks. Performance testing assesses the scalability of these tools, ensuring they can expand their capacity without compromising speed or accuracy. This is especially important in enterprise environments where AI code generators may be integrated into large-scale development workflows.
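A basic scalability check repeats the same workload at increasing concurrency levels and watches whether throughput keeps rising or flattens out. The sketch below assumes an I/O-bound generator (for example, a call to a remote API), which is why threads are used; `fake_generate`, `throughput_at`, and `scalability_sweep` are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fake_generate(prompt: str) -> str:
    """Hypothetical I/O-bound stand-in: each request takes about 10 ms."""
    time.sleep(0.01)
    return "pass\n"


def throughput_at(generate: Callable[[str], str], prompts: list[str], workers: int) -> float:
    """Return completed requests per second when `workers` threads share the prompt list."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(generate, prompts))  # consume results so every call finishes
    return len(prompts) / (time.perf_counter() - start)


def scalability_sweep(
    generate: Callable[[str], str], prompts: list[str], worker_counts: list[int]
) -> dict[int, float]:
    """Measure throughput at each concurrency level in `worker_counts`."""
    return {w: throughput_at(generate, prompts, w) for w in worker_counts}


if __name__ == "__main__":
    for workers, rps in scalability_sweep(fake_generate, ["p"] * 32, [1, 2, 4, 8]).items():
        print(f"{workers:>2} workers: {rps:6.1f} req/s")
```

Against a real service, the concurrency level at which throughput stops improving (or error rates start climbing) marks the practical capacity limit; the stub here scales almost linearly only because it does nothing but sleep.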

4. Resource Utilization

AI models require significant computational resources, including processing power, memory, and storage. Performance testing helps evaluate how efficiently an AI code generator uses these resources. Understanding resource utilization is crucial for optimizing the deployment of AI tools, particularly in cloud-based environments where cost efficiency is paramount.
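At the process level, Python's standard library can report the CPU time and peak memory allocated by Python code during a call. The sketch assumes the workload runs in-process; `measure_resources` and `sample_workload` are hypothetical, and `tracemalloc` only sees allocations made through Python's allocator, so GPU memory or memory used by a remote model server is not captured.

```python
import time
import tracemalloc
from typing import Callable


def measure_resources(fn: Callable[[], object]) -> dict[str, float]:
    """Run `fn` once and report wall time, CPU time, and peak traced Python memory (bytes)."""
    tracemalloc.start()
    cpu_start = time.process_time()
    wall_start = time.perf_counter()
    try:
        fn()
    finally:
        wall_s = time.perf_counter() - wall_start
        cpu_s = time.process_time() - cpu_start
        _, peak_bytes = tracemalloc.get_traced_memory()  # returns (current, peak)
        tracemalloc.stop()
    return {"wall_s": wall_s, "cpu_s": cpu_s, "peak_bytes": peak_bytes}


def sample_workload() -> list[str]:
    """Hypothetical stand-in for in-process work such as post-processing generated code."""
    return [f"line_{i} = {i}" for i in range(100_000)]


if __name__ == "__main__":
    print(measure_resources(sample_workload))
```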

5. User Experience and Responsiveness

For AI code generators to be effective, they must provide a seamless user experience. Performance testing evaluates how responsive the tool is to user inputs and how quickly it can generate code based on those inputs. A laggy or unresponsive tool can frustrate users and reduce productivity, making performance testing essential for maintaining a positive user experience.
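For interactive use, many tools stream their output, so a useful responsiveness metric is the time until the first chunk of code appears, measured separately from total generation time. The sketch assumes a streaming interface that yields text chunks; `fake_stream` and `time_to_first_chunk` are hypothetical.

```python
import time
from typing import Iterable, Iterator, Optional


def fake_stream(prompt: str) -> Iterator[str]:
    """Hypothetical streaming generator that yields code in chunks, pausing before each one."""
    for chunk in ["def add(a, b):\n", "    return a + b\n"]:
        time.sleep(0.01)
        yield chunk


def time_to_first_chunk(stream: Iterable[str]) -> tuple[Optional[float], float, str]:
    """Consume `stream`; return (seconds to first chunk, total seconds, full text)."""
    start = time.perf_counter()
    first: Optional[float] = None
    parts = []
    for chunk in stream:  # a generator's body only starts running here
        if first is None:
            first = time.perf_counter() - start
        parts.append(chunk)
    return first, time.perf_counter() - start, "".join(parts)


if __name__ == "__main__":
    ttfc, total, code = time_to_first_chunk(fake_stream("write an add function"))
    print(f"first chunk after {ttfc:.3f}s, complete after {total:.3f}s")
```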

Challenges in Performance Testing of AI Code Generators

Performance testing of AI code generators presents unique challenges that are not typically encountered in traditional software testing. These challenges include:

Complexity of AI Models: The models behind AI code generators are highly complex, which makes their behavior under different conditions difficult to predict. This complexity demands sophisticated testing strategies that can accurately assess performance across many scenarios.

Dynamic Nature of AI: AI models are constantly evolving through updates and retraining. Performance testing should be an ongoing process to ensure that each new version of the model maintains or improves performance without introducing new issues.

Diverse User Inputs: AI code generators must handle a wide range of user inputs, from simple code snippets to complex algorithms. Performance testing must account for this diversity, ensuring the tool can consistently deliver high-quality code across different use cases.

Resource Constraints: Testing AI models, especially at scale, requires substantial computational resources. Balancing the need for thorough testing against the resources available can be a critical challenge in performance testing.
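Because each model update can shift performance, one practical response to the challenges above is to store baseline metrics and compare every new model version against them. A minimal sketch, assuming metrics are collected as a flat dictionary; `find_regressions`, the metric names, the 10% tolerance, and the sample numbers are all illustrative, not real measurements:

```python
# Metrics where a larger value is better; every other metric is treated as lower-is-better.
HIGHER_IS_BETTER = {"pass_rate", "throughput_rps"}


def find_regressions(
    baseline: dict[str, float], candidate: dict[str, float], tolerance: float = 0.10
) -> list[str]:
    """List metrics where `candidate` is worse than `baseline` by more than `tolerance` (relative)."""
    regressions = []
    for name, old in baseline.items():
        new = candidate.get(name)
        if new is None:
            regressions.append(f"{name}: missing from candidate")
            continue
        if name in HIGHER_IS_BETTER:
            worse = new < old * (1 - tolerance)
        else:
            worse = new > old * (1 + tolerance)
        if worse:
            regressions.append(f"{name}: {old} -> {new}")
    return regressions


# Illustrative numbers only.
baseline = {"p95_latency_s": 1.20, "pass_rate": 0.82}
candidate = {"p95_latency_s": 1.50, "pass_rate": 0.84}
print(find_regressions(baseline, candidate))  # ['p95_latency_s: 1.2 -> 1.5']
```

In a CI pipeline, a non-empty result could be used to block promotion of the new model version until the regression is explained.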

Best Practices for Performance Testing of AI Code Generators

To conduct performance testing on AI code generators effectively, developers should follow best practices that address the unique challenges of AI systems:

Automated Testing Frameworks: Implement automated testing frameworks that can continuously evaluate the performance of AI code generators as they evolve. These frameworks should be capable of running a wide range of test scenarios, including load, stress, and scalability tests.

Real-World Test Scenarios: Design test scenarios that reflect real-world usage patterns. This includes testing the AI code generator with diverse inputs, varying complexity levels, and different load conditions to ensure it performs well in all situations.

Regular Monitoring and Feedback Loops: Establish regular monitoring of the AI code generator’s performance in production environments. Create feedback loops that allow developers to quickly identify and address performance issues as they arise.

Scalability Testing: Prioritize scalability testing to ensure the AI code generator can handle growth in user demand and task complexity without degrading performance.

Resource Optimization: Continuously monitor and optimize the resource utilization of AI code generators to ensure they are cost-effective and efficient, especially in cloud environments.
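For the monitoring and feedback-loop practice, a simple production check keeps a rolling window of recent latencies and raises a flag when the window's p95 crosses a threshold. A minimal sketch; the `LatencyMonitor` class, window size, warm-up count, and threshold are assumptions to tune for a real deployment, and in practice the flag would feed an alerting system rather than a `print`.

```python
import statistics
from collections import deque


class LatencyMonitor:
    """Hypothetical rolling-window monitor for generation latency in production."""

    def __init__(self, window: int = 200, p95_threshold_s: float = 2.0, min_samples: int = 20):
        self.samples: deque[float] = deque(maxlen=window)  # oldest samples drop off automatically
        self.p95_threshold_s = p95_threshold_s
        self.min_samples = min_samples

    def record(self, latency_s: float) -> bool:
        """Add one observation; return True if the rolling p95 exceeds the threshold."""
        self.samples.append(latency_s)
        if len(self.samples) < self.min_samples:
            return False  # too few samples for a meaningful p95
        p95 = statistics.quantiles(self.samples, n=100, method="inclusive")[94]
        return p95 > self.p95_threshold_s


if __name__ == "__main__":
    monitor = LatencyMonitor(window=50, p95_threshold_s=1.0)
    for latency in [0.4] * 30 + [3.0] * 30:  # simulated slowdown halfway through
        if monitor.record(latency):
            print(f"ALERT: rolling p95 above {monitor.p95_threshold_s}s")
            break
```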

Conclusion

As AI code generators become an integral part of the software development landscape, the importance of performance testing cannot be overstated. By ensuring these tools are accurate, efficient, scalable, and responsive, developers can unlock the full potential of AI-driven coding while minimizing risks and maximizing productivity. As the technology continues to evolve, ongoing performance testing will be essential to maintaining the reliability and effectiveness of AI code generators in real-world applications.